At its 2022 GTC conference this week, Nvidia announced the start of production of its Drive Orin autonomous vehicle computer, showcased new automakers adopting its Drive platform, and unveiled the next generation of its Drive Hyperion architecture.

The GPU (graphics processing unit) and AI (artificial intelligence) pioneer says that its Drive Hyperion with Orin serves as the central nervous system and AI brain for new energy vehicles (NEVs), delivering constantly improving, cutting-edge AI features while ensuring safe and secure driving capabilities.

“Future cars will be fully programmable, evolving from many embedded controllers to powerful centralized computers—with AI and AV functionalities delivered through software updates and enhanced over the life of the car,” said Jensen Huang, Founder and CEO of Nvidia. “Nvidia Drive Orin has been enormously successful with companies building this future and is serving as the ideal AV and AI engine for the new generation of EVs, robotaxis, shuttles, and trucks.”

Drive adoption grows

Nvidia says its total automotive design-win pipeline, which covers the next six years, has grown from $8 billion last April to more than $11 billion. The pipeline spans the top EV makers; top automakers such as Jaguar Land Rover, Mercedes-Benz, and Volvo Cars; leading trucking companies including Plus, TuSimple, and Volvo Autonomous Solutions; and robotaxi manufacturers AutoX, DiDi, Pony.ai, and Zoox.

At GTC, Chinese EV leader BYD and California EV startup Lucid Group announced they are adopting Nvidia Drive for their next-generation fleets. In addition, NEV makers such as Nio, Li Auto, XPeng, SAIC’s IM Motors and R Auto brands, JiDU, Human Horizons, VinFast, and WM Motor are developing software-defined fleets on Drive. With these latest announcements, Nvidia says that Drive Orin has become the AI compute platform of choice for 20 of the top 30 passenger electric vehicle makers in the world.

BYD will use the Nvidia Drive Hyperion computing architecture in its NEVs for automated driving and parking starting in the first half of 2023. The automaker says that the cooperation is an important joint effort in the production and deployment of environmentally sustainable NEVs that become increasingly intelligent with over-the-air software updates.

“Software-defined autonomy and electrification are some of the driving forces behind the automotive industry’s transformation,” said Rishi Dhall, Vice President of Automotive, Nvidia. “Through the power of AI and the Nvidia Drive platform, BYD will deliver software-defined EV fleets that are not only safe and reliable but also improve over time.”

BYD’s NEVs will feature the Orin SoC (system on chip) as the centralized compute and AI engine for automated driving and intelligent cockpit features. Orin, which comes with ISO 26262 ASIL-D certification, delivers 254 TOPS (trillion operations per second) of compute performance. It features high-speed peripheral interfaces and 205 GB/s of memory bandwidth to handle data from multiple sensor configurations.

In China, BYD has dominated the EV sales charts with industry-leading technologies such as the Blade battery. It sold nearly 600,000 electric passenger vehicles in 2021, with cumulative production and sales volume of EVs of over 1.5 million units.

At GTC, Lucid revealed that its DreamDrive Pro advanced driver-assistance system (ADAS) is built on Drive Hyperion, a scalable, software-defined platform that lets its vehicles receive continuous improvements over the air.

Standard on Dream Edition and Grand Touring trims and optional on other models, DreamDrive Pro uses a suite of 14 cameras, one LiDAR, five radars, and 12 ultrasonics for automated driving and intelligent cockpit features. Lucid’s system offers dual-rail power and a proprietary Ethernet Ring for a high degree of redundancy for key systems such as braking and steering.

“The seamless integration of the software-defined Nvidia Drive platform provides a powerful basis for Lucid to further enhance what DreamDrive can do in the future—all of which can be delivered to vehicles over the air,” said Mike Bell, Senior Vice President, Digital, Lucid Group.

Together, Lucid and Nvidia will support these intelligent vehicles, enhancing the customer experience with new functions throughout the life of the car.

The next Hyperion

At his GTC keynote, Huang announced that the next generation of the Drive Hyperion architecture, Hyperion 9, is built on the company’s new Atlan computer and will start shipping for 2026 vehicle production. The company describes Hyperion as the nervous system of a vehicle and Atlan as the brain.

With the same computer form factor and Nvidia DriveWorks APIs (application programming interfaces), Hyperion 9 is designed to scale across generations so customers can leverage current investments for future architectures.

The platform will increase performance for processing sensor data, both to enhance safety and to extend the operating domains for higher levels of autonomy. Multiple Atlan computers will enable intelligent driving and in-cabin functionality, with the platform including the sensor set and full Drive Chauffeur and Concierge applications.

With the Atlan SoC, the platform features more than double the performance of the current Orin-based architecture within the same power envelope. It is capable of handling SAE Level 4 autonomous driving and the convenience and safety features of Concierge.

Atlan fuses all of Nvidia’s technologies in AI, automotive, robotics, safety, and BlueField data centers. Leveraging Nvidia’s high-performance GPU architecture, Arm CPU cores, and deep learning and computer vision accelerators, it provides ample compute power for redundant and diverse deep neural networks and leaves headroom for developers to continue adding features and improvements.

With Atlan compute performance, Hyperion 9 can process more sensor data, improving redundancy and diversity. The upgraded sensor suite includes surround imaging radar, enhanced cameras with higher frame rates, two additional side LiDARs, and improved undercarriage sensing with better camera and ultrasonic placements.

In total, the architecture can accommodate 14 cameras, nine radars, three LiDARs, and 20 ultrasonics for automated and autonomous driving, as well as three cameras and one radar for interior occupant sensing.
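As a quick sanity check on those figures, the sensor counts can be tallied in a few lines of Python. This is only an illustrative sketch: the `SensorSuite` class and its field names are hypothetical and not part of any Nvidia API, and the numbers are simply the ones quoted in this article for Hyperion 9 and for Lucid's DreamDrive Pro.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSuite:
    """Sensor counts for one sensing zone (hypothetical helper, illustrative names)."""
    cameras: int = 0
    radars: int = 0
    lidars: int = 0
    ultrasonics: int = 0

    def total(self) -> int:
        # Sum every sensor type in this suite.
        return self.cameras + self.radars + self.lidars + self.ultrasonics


# Counts as quoted in the article.
HYPERION9_EXTERIOR = SensorSuite(cameras=14, radars=9, lidars=3, ultrasonics=20)
HYPERION9_INTERIOR = SensorSuite(cameras=3, radars=1)
LUCID_DREAMDRIVE_PRO = SensorSuite(cameras=14, radars=5, lidars=1, ultrasonics=12)

if __name__ == "__main__":
    print("Hyperion 9 exterior:", HYPERION9_EXTERIOR.total())
    print("Hyperion 9 interior:", HYPERION9_INTERIOR.total())
    print("DreamDrive Pro:", LUCID_DREAMDRIVE_PRO.total())
```

By this count, Hyperion 9 accommodates 46 exterior and four interior sensors, compared with the 32 sensors in Lucid's current DreamDrive Pro suite.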

Multi-modal mapping engine

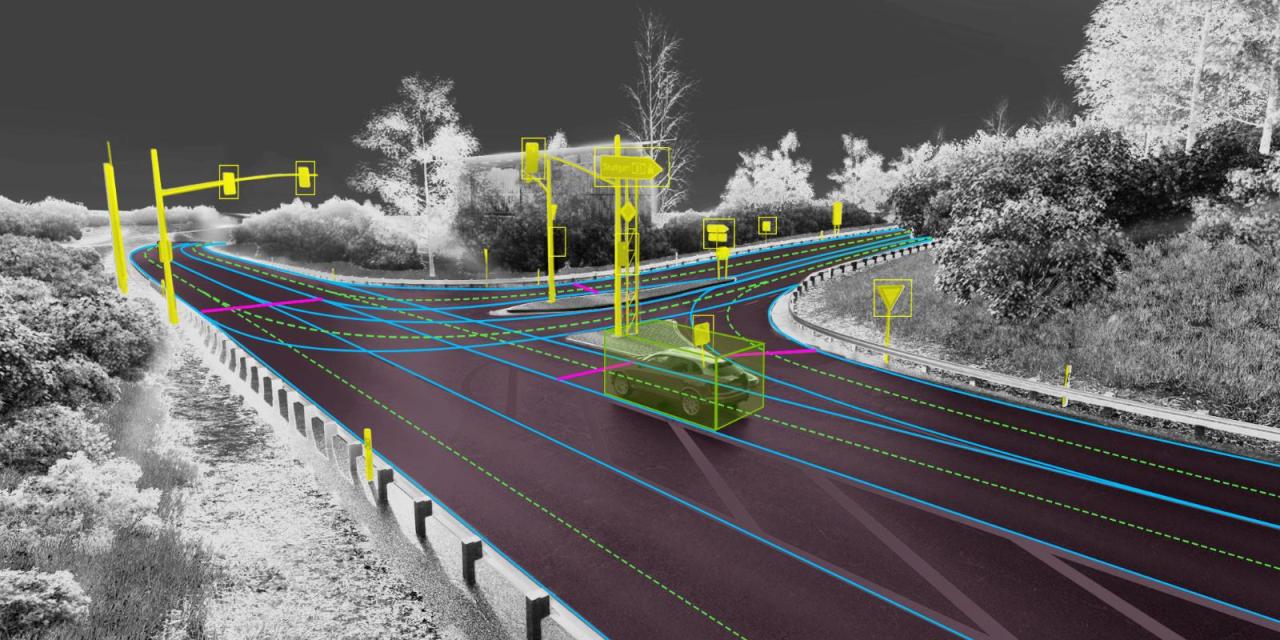

Nvidia aims to accelerate deployment of Level 3 and Level 4 autonomy with a mapping fleet to survey 500,000 km (310,000 mi) worldwide by 2024 and by creating an Earth-scale digital twin with centimeter-level accuracy.

At his GTC keynote, Huang introduced Nvidia Drive Map, a multimodal mapping platform designed to enable the highest levels of autonomy by combining the accuracy of DeepMap survey mapping with the freshness and scale of AI-based crowdsourced mapping.

Drive Map will provide survey-level ground-truth mapping coverage of roadways in North America, Europe, and Asia. The map will be continuously updated and expanded with data from millions of passenger vehicles.

With three localization layers from the camera, LiDAR, and radar sensors, the company believes that it provides the redundancy and versatility required by the most advanced AI drivers.

The camera layer consists of map attributes such as lane dividers, road markings, road boundaries, traffic lights, signs, and poles.

The radar layer is an aggregate point cloud of radar returns. It is particularly useful in poor lighting, which is challenging for cameras, and in poor weather, which is challenging for both cameras and LiDARs. Radar localization is also useful in suburban areas where typical map attributes aren’t available, enabling the AI driver to localize based on surrounding objects that generate a radar return.

The LiDAR layer provides the most precise and reliable representation of the environment. It builds a 3D representation of the world at 5-cm (2-in) resolution, an accuracy that Nvidia says is impossible to achieve with cameras and radars.
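The redundancy argument behind the three layers can be sketched as a small lookup. The function below is a hypothetical illustration, not Nvidia's localization logic: it encodes only the statements made here, namely that poor lighting is challenging for cameras and that poor weather is challenging for cameras and LiDARs, and it assumes radar stays usable in both cases.

```python
from __future__ import annotations


def usable_layers(poor_lighting: bool, poor_weather: bool) -> list[str]:
    """Return the localization layers the article does not flag as challenged.

    Hypothetical helper. Assumptions taken from the text:
    - poor lighting challenges the camera layer
    - poor weather challenges the camera and LiDAR layers
    - the radar layer is treated as usable in both conditions
    """
    challenged: set[str] = set()
    if poor_lighting:
        challenged.add("camera")
    if poor_weather:
        challenged.update({"camera", "lidar"})
    # Preserve a fixed layer order so results are easy to compare.
    return [layer for layer in ("camera", "lidar", "radar") if layer not in challenged]


if __name__ == "__main__":
    print(usable_layers(poor_lighting=False, poor_weather=False))
    print(usable_layers(poor_lighting=True, poor_weather=False))
    print(usable_layers(poor_lighting=False, poor_weather=True))
```

Under these assumptions, at least one layer remains available in every combination of conditions, which is the point of keeping three independent layers.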

Once localized to the map, the AI can use the detailed semantic information provided by the map to plan ahead and make driving decisions safely.

Nvidia says that the approach is unique in combining two map engines: a ground-truth survey map engine and a crowdsourced map engine.

The ground-truth portion is based on the DeepMap survey map engine, developed and verified over the past six years. The AI-based crowdsourced engine gathers map updates from millions of cars, which continuously upload new data to the cloud as they drive. The data are then aggregated, loaded to Nvidia Omniverse, and used to update the map, providing the real-world fleet with fresh over-the-air map updates within hours.
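Nvidia has not described how the aggregation step works, so the following is only a toy sketch of the general idea of combining many vehicle reports into a single map update. Everything in it is an assumption made for illustration: the `aggregate_updates` function, the `(tile_id, offset)` report format, the use of a median, and the minimum-report threshold.

```python
from collections import defaultdict
from statistics import median


def aggregate_updates(reports, min_reports=3):
    """Combine per-vehicle observations into one value per map tile.

    Hypothetical sketch, not Nvidia's method.
    reports: iterable of (tile_id, observed_offset_m) tuples, one per vehicle pass.
    Tiles with fewer than min_reports observations are left out, so a single
    outlier vehicle cannot change the map on its own.
    """
    by_tile = defaultdict(list)
    for tile_id, offset in reports:
        by_tile[tile_id].append(offset)
    # The median is robust to a minority of bad readings.
    return {
        tile: median(values)
        for tile, values in by_tile.items()
        if len(values) >= min_reports
    }


if __name__ == "__main__":
    sample = [("a", 0.10), ("a", 0.12), ("a", 0.50), ("b", 0.20)]
    print(aggregate_updates(sample))
```

In this sample, tile "a" has three reports and is updated with the median value, while tile "b" has only one report and is skipped.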

Drive Map also provides a data interface, Drive MapStream, that allows any passenger car that meets its requirements to continuously update the map using its camera, radar, and LiDAR data.

In addition to helping the AI make optimal driving decisions, Drive Map accelerates AV deployment. The workflows are centered on Omniverse, where real-world map data is loaded and stored. Nvidia’s Omniverse maintains an Earth-scale representation of the digital twin that is continuously updated and expanded by survey map vehicles and millions of passenger vehicles.

Using automated content generation tools built on Omniverse, the detailed map is converted into a drivable simulation environment that can be used with Drive Sim to accurately replicate features such as road elevation, road markings, islands, traffic signals, signs, and vertical posts.

With physically based sensor simulation and domain randomization, AV developers can use the simulated environment to generate training scenarios that aren’t available in real data. They can also apply scenario-generation tools to test AV software in digital-twin environments before deploying AVs in the real world.

The digital twin provides fleet operators a virtual view of where the vehicles are driving in the world, assisting remote operation when needed.
