Today, Nvidia kicks off its latest GTC event with a keynote from company Founder and CEO Jensen Huang.

While the conference is for the broad base of AI innovators, technologists, and creators, once again there is a lot of automotive news to digest. In a pre-brief for media, Danny Shapiro, Vice President, Automotive at Nvidia, gave a rundown.

Drive Hyperion 8 architecture

The top news was the announcement of Drive Hyperion 8, a compute architecture and sensor set for full self-driving systems. The latest generation technology, designed for the highest levels of functional safety and cybersecurity, is supported by sensors from leading suppliers such as Continental, Hella, Luminar, Sony, and Valeo. It is available now for 2024 vehicles, Huang announced at GTC.

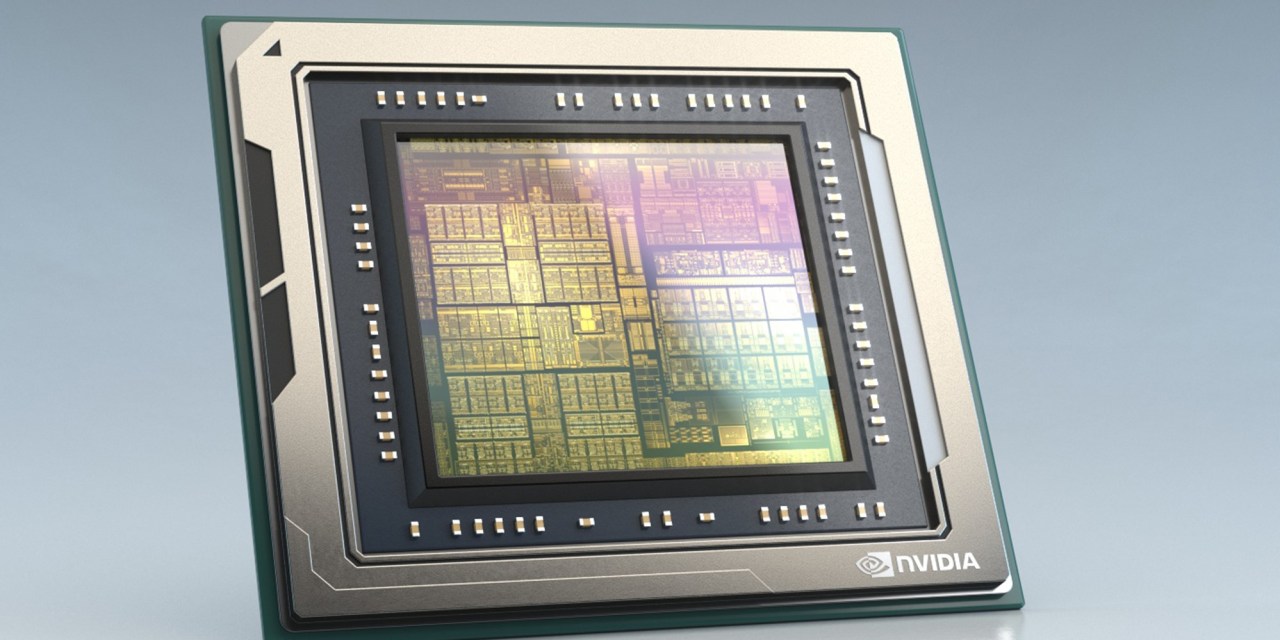

“Hyperion enables high-fidelity sensing redundancy and fail-over safety with ample computing power and programmability, which is key,” said Shapiro. “That enables the system to get better and better with each over-the-air update. All of this is processed by two of our newest Drive Orin SOCs delivering a total of more than 500 TOPS.”

The production platform is designed to be modular so customers can use what they need, from core compute and middleware to NCAP (New Car Assessment Program), SAE Level 3 driving, Level 4 parking, and AI cockpit capabilities. It is also scalable: the current Drive Orin SOC (system on chip) is designed to be upgradeable to the next-generation Drive Atlan, with DriveWorks APIs that are compatible across generations.

The Hyperion architecture has a functional-safety AI compute platform at its core, providing autonomous vehicle developers two Orin SOCs for redundancy and fail-over safety. It is said to have ample compute for Level 4 self-driving and intelligent cockpit capabilities.

The Drive AV software contains DNNs (deep neural networks) for perception, mapping, planning, and control. The Hyperion 8 developer kit also includes Ampere architecture GPUs, whose high-performance compute provides ample headroom to test and validate new software capabilities.

Nvidia’s DriveWorks sensor abstraction layer streamlines sensor setup with easy-to-use plugins. Hyperion’s base sensor suite features 12 cameras, 9 radars, 12 ultrasonics, and 1 front-facing lidar sensor, but AV suppliers can customize the platform for their individual self-driving solutions.

The open, flexible ecosystem ensures developers can test and validate their technology on the exact hardware that will be on the vehicle. For instance, developers have access to Luminar’s long-range Iris sensor for front-facing lidar development.

“Nvidia has led the modern compute revolution, and the industry sees them as doing the same with autonomous driving,” said Austin Russell, Founder and CEO of Luminar. “The common thread between our two companies is that our technologies are becoming the de facto solution for major automakers to enable next-generation safety and autonomy. By taking advantage of our respective strengths, automakers will have access to the most advanced autonomous vehicle development platform.”

The radar suite includes Hella short-range and Continental long-range and imaging radars for redundant sensing. Sony and Valeo cameras provide cutting-edge visual sensing, and ultrasonic sensors from Valeo can measure close-object distance.
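To make the base configuration concrete, here is a minimal Python sketch that records the Hyperion 8 base sensor suite as plain data, using the counts and suppliers named above. The `SensorGroup` and `SensorSuite` types and the `with_count` helper are hypothetical illustrations, not part of DriveWorks or any Nvidia API. The article does not say how many units each supplier contributes, so suppliers are listed per sensor type only.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SensorGroup:
    kind: str                  # e.g. "camera", "radar"
    count: int                 # number of units of this kind
    suppliers: tuple[str, ...]  # suppliers named for this sensor type


@dataclass(frozen=True)
class SensorSuite:
    groups: tuple[SensorGroup, ...]

    def total(self) -> int:
        # Total number of physical sensors across all groups.
        return sum(g.count for g in self.groups)

    def with_count(self, kind: str, count: int) -> "SensorSuite":
        # Return a customized copy; the base suite itself is left unchanged.
        # Kinds not present in the suite are silently ignored.
        return SensorSuite(tuple(
            replace(g, count=count) if g.kind == kind else g
            for g in self.groups
        ))


# Hypothetical encoding of the base suite described in the article.
HYPERION8_BASE = SensorSuite((
    SensorGroup("camera", 12, ("Sony", "Valeo")),
    SensorGroup("radar", 9, ("Hella", "Continental")),
    SensorGroup("ultrasonic", 12, ("Valeo",)),
    SensorGroup("lidar", 1, ("Luminar",)),
))

print(HYPERION8_BASE.total())  # 34
```

`with_count` returns a modified copy rather than mutating the base suite, mirroring the idea that an AV supplier customizes the platform without changing the reference configuration.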

The entire toolset is synchronized and calibrated for 4D data collection, saving developers valuable time in deploying safe and robust AVs.

From DeepMap to Drive Mapping

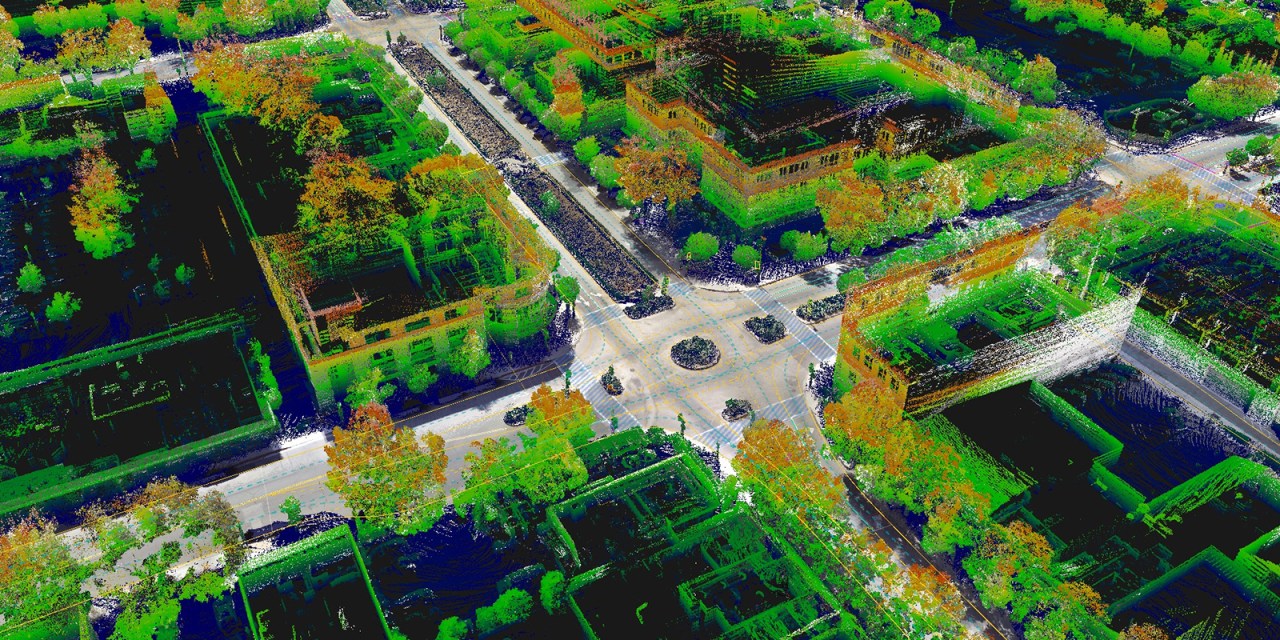

DeepMap, the mapping company Nvidia recently acquired, plays a starring role in Drive Mapping, which leverages perception results from vehicles running Drive Hyperion 8.

Drive Mapping includes both camera and radar localization layers in every region that is mapped for AI-assisted driving capabilities. Radar provides a layer of redundancy for localizing and driving in poor weather and lighting conditions where cameras may be blinded. To improve reliability and accuracy, the mapping networks are trained on ground-truth maps.

“Mapping is a critical pillar of driving,” explained Shapiro. “Having this ground truth is, in essence, an additional sensor on the vehicle. Survey mapping is created by our dedicated mapping vehicles. We have a fleet to survey map the most popular areas in the world. And survey mapping primes the map before a fleet of our customer’s cars are even launched and serve as the ground truth to teach our mapping AI models.”

As a crowdsourced platform, Drive Mapping expands its coverage as more automakers adopt Hyperion. These automakers are on track to have vehicle fleets on roads around the world starting in 2024, and those fleets will continue to grow.

Drive Mapping leverages Nvidia’s DGX SuperPOD infrastructure to maintain the maps at a global scale. These AI systems ingest terabytes of perception data from Hyperion vehicles to create and update maps. The broad base of Hyperion vehicles on the road, combined with robust perception, allows the system to detect road changes and keep maps fresh.

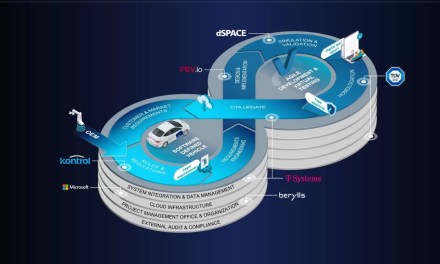

Omniverse Replicator for Drive Sim

In his GTC keynote, Huang announced Omniverse Replicator, an engine for generating synthetic data with ground truth for training AI networks for AV development using Drive Sim. Data generated by Drive Sim is used to train the deep neural networks at the heart of perception systems. Developing these networks involves two parts: an algorithmic model and the data used to train that model.

Engineers have dedicated significant time to refining algorithms, but Nvidia says the data side of the equation is still underdeveloped because real-world data are often incomplete, as well as time-consuming and costly to collect. The imbalance often leads to a plateau in DNN development, hindering progress when the data cannot meet the demands of the model. With synthetic data generation, developers have more control over the data and can tailor it to the specific needs of the model.

While real-world data are critical components for AV training, testing, and validation, they present significant challenges. The data used to train these networks are collected by sensors on a vehicle fleet during real-world drives.

Once captured, the data must be labeled with ground truth, an annotation task done by hand by thousands of labelers. The process is time-consuming, costly, and can be inaccurate. Augmenting real-world data collection with synthetic data removes these bottlenecks while allowing engineers to take a data-driven approach to DNN development, significantly accelerating AV development and improving real-world results.

“We have about 3000 professional labelers creating our training data,” explained Shapiro. “It’s just an enormous task. To help accelerate and improve efficiency, we now are leveraging Omniverse and our Drive Sim software to generate synthetic data. This is a cornerstone of our data strategy. With our new Omniverse Replicator for Drive Sim, we create realistic scenes through simulated cameras with data labeled automatically. This is a great time and cost savings.”
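Synthetic data can arrive “labeled automatically” because the simulator already knows the exact position of every object it renders. The sketch below is a simplified illustration, not Omniverse Replicator’s actual pipeline: it computes a 2D bounding-box label by projecting the eight corners of a known 3D box through an ideal pinhole camera. It assumes camera-frame coordinates with z pointing forward, no lens distortion, and no occlusion handling; all names (`Camera`, `Box3D`, `auto_label`) are hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import Optional


@dataclass
class Camera:
    # Ideal pinhole intrinsics, in pixels (hypothetical values in the example).
    fx: float
    fy: float
    cx: float
    cy: float


@dataclass
class Box3D:
    label: str
    center: tuple[float, float, float]  # camera frame, meters; z is forward
    size: tuple[float, float, float]    # extent along x, y, z, meters


def project(cam: Camera, p: tuple[float, float, float]) -> tuple[float, float]:
    # Perspective projection of a 3D point onto the image plane.
    x, y, z = p
    return cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy


def auto_label(cam: Camera, box: Box3D) -> Optional[dict]:
    # Derive a 2D bounding-box label directly from the known 3D scene state.
    cx, cy, cz = box.center
    w, h, length = box.size
    corners = [
        (cx + sx * w / 2, cy + sy * h / 2, cz + sz * length / 2)
        for sx, sy, sz in product((-1, 1), repeat=3)
    ]
    if any(c[2] <= 0 for c in corners):
        return None  # part of the box is at or behind the camera plane
    pts = [project(cam, c) for c in corners]
    us = [u for u, _ in pts]
    vs = [v for _, v in pts]
    # Box is (u_min, v_min, u_max, v_max); not clipped to image bounds.
    return {"label": box.label, "bbox": (min(us), min(vs), max(us), max(vs))}


cam = Camera(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
car = Box3D("car", center=(0.0, 0.0, 20.0), size=(2.0, 1.5, 4.0))
print(auto_label(cam, car))
```

A production labeling pipeline would also account for truncation at image edges, occlusion between objects, and real lens models; the point here is only that the label is computed from scene state rather than drawn by hand.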

Drive Sim has already produced significant results in accelerating perception development with synthetic data at Nvidia. One example is the migration to the latest Hyperion sensor set. Before AV sensors were available, the Drive team was able to bring up DNNs for the platform using synthetic data. Drive Sim generated millions of images and ground-truth data for training. As a result, the networks were ready to deploy as soon as the sensors were installed, saving valuable months of development time.

Drive Sim on Omniverse will be available to developers via an early access program.

Drive Concierge AI assistant and Chauffeur autonomous driver

In his GTC keynote, Huang also announced two AI platforms to transform the digital experience inside the car. Nvidia says that software-defined vehicles could incorporate two computers built with Drive Orin—one for AI assistants called Drive Concierge, the other for autonomous driving called Drive Chauffeur.

With Concierge, vehicle occupants have access to intelligent services provided by Nvidia’s Drive IX and Omniverse Avatar for real-time conversational AI. The Omniverse Avatar connects speech AI, computer vision, natural language understanding, recommendation engines, and simulation. Avatars created in the platform are interactive characters with ray-traced 3D graphics that can see, speak, and converse on a range of subjects and can understand naturally spoken intent.

“These AIs will enable us to reimagine how we interact with our cars and create a personal concierge in the car that will be on call for you,” said Shapiro. “It will provide a confidence view on the dash showcasing what’s in the mind of the automated driver, aka your chauffeur, so that you will trust the autonomous driving system.”

The technology of Omniverse Avatar enables the Concierge to serve as a digital assistant, helping make recommendations, book reservations, make phone calls, and provide alerts. It’s personalized to each driver and passenger, giving every vehicle occupant their own personal concierge. Occupants will be able to have a natural conversation with a vehicle and use voice to control many functions that previously required physical controls or touch screens.

The Concierge can act as an on-demand valet, parking a car so drivers can easily continue on their way. A guardian function can use interior cameras and multimodal interaction to ensure that driver attention is on the road when necessary.

It is tightly integrated with Drive Chauffeur to provide 360-degree, 4D visualization with low latency so drivers can comfortably become riders and trust the Chauffeur to safely drive. This cooperation between systems enables the Concierge to perform functions such as summoning the vehicle, searching for a parking spot, and reconstructing a 3D surround view of the vehicle.

The Chauffeur AI-assisted driving platform is based on the Drive AV SDK and can handle both highway and urban traffic. It uses the high-performance compute architecture and sensor set of Hyperion 8 to drive from address to address. For those who want to drive, the system provides active safety features and can intervene in dangerous scenarios.

New OEMs onboard

At GTC, performance automaker Lotus, autonomous bus manufacturer QCraft, and EV startup WM Motor announced that they are using Drive Hyperion for their next-generation software-defined vehicles.

They join global automakers such as Mercedes-Benz and Volvo Cars, EV startups like Nio, and Tier 1 suppliers, software startups, sensor makers, and robotaxi companies that are developing on the high-performance Drive Orin SOC. The SOC achieves 254 TOPS (trillion operations per second) and is designed to handle the large number of applications and DNNs that run simultaneously in AVs while achieving systematic safety standards such as ISO 26262 ASIL-D.

Lotus is using Drive Orin to develop intelligent human driving technology designed for the track with centralized compute and redundant and diverse DNNs.

Baidu is integrating Drive Orin within its third-generation autonomous driving platform—called the Apollo Computing Unit—to improve driving performance and reduce production costs to accelerate the development of its AVs.

In October, WM Motor announced its flagship M7 electric smart car will feature four Orin SoCs delivering 1016 TOPS of compute performance.
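The compute figures in this article are consistent with one another if per-SOC ratings are simply summed. The short Python check below assumes aggregate peak TOPS scale linearly with the number of Orin SOCs; that describes headline peak capacity, not sustained throughput on real workloads. The `total_peak_tops` helper is a hypothetical name used only for illustration.

```python
ORIN_TOPS = 254  # per-SOC peak figure quoted above


def total_peak_tops(num_socs: int, per_soc_tops: int = ORIN_TOPS) -> int:
    """Aggregate peak TOPS, assuming per-SOC ratings simply add up."""
    if num_socs < 0:
        raise ValueError("num_socs must be non-negative")
    return num_socs * per_soc_tops


hyperion8 = total_peak_tops(2)  # two Orin SOCs in Drive Hyperion 8
wm_m7 = total_peak_tops(4)      # four Orin SOCs in the WM Motor M7

print(hyperion8, wm_m7)  # 508 1016
```

Two SOCs give 508 TOPS, matching the “more than 500 TOPS” Shapiro cited for Hyperion 8, and four give the 1016 TOPS WM Motor quoted for the M7.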

In the mass-transit space, QCraft will adopt Drive Orin for its next-generation Driven-by-QCraft self-driving platform. The system, which will power offerings ranging from robobuses to robotaxis, is expected to launch in late 2023.

On the truck side, Kodiak Robotics’ fourth-generation vehicle has a lightweight mapping strategy that uses Drive Orin to detect road objects and signs. Plus has also announced plans to transition to Drive Orin beginning next year for its self-driving system known as PlusDrive.